Executive Overview

When someone creates an account on a website, that's a vulnerable moment. Bad actors know this and exploit it with fake emails, automated bots, and malicious code hidden in signup forms.

This project demonstrates how to catch threats before accounts are created — not after damage is done.

What I built:

- A signup system that evaluates risk in real-time

- Clear rules that decide: allow the signup, challenge it, or block it

- A complete data pipeline that captures every signup attempt

- A dashboard showing exactly what's happening and why

Rather than relying on post-login controls, this project shifts security left, reducing fraud, bot signups, and attack surface.

How might we detect and stop bad actors during account creation — before we've given them access — while keeping the signup process smooth and welcoming for legitimate customers?

Problem Statement

Why signup forms are targets

Account creation is one of the most attacked parts of any application:

- Bots flood signups with fake accounts

- Throwaway emails enable spam and abuse

- Malicious code injections probe for vulnerabilities

- Credential testing prepares for future attacks

How most companies handle this

Many organizations rely on:

- Generic security tools that block based on hidden rules

- Alerts that arrive after the account already exists

- Hoping attackers won't find their site

A better approach

This project treats identity as the first line of defense. Instead of reacting after accounts are created, we make explicit, transparent security decisions at the moment someone tries to sign up.

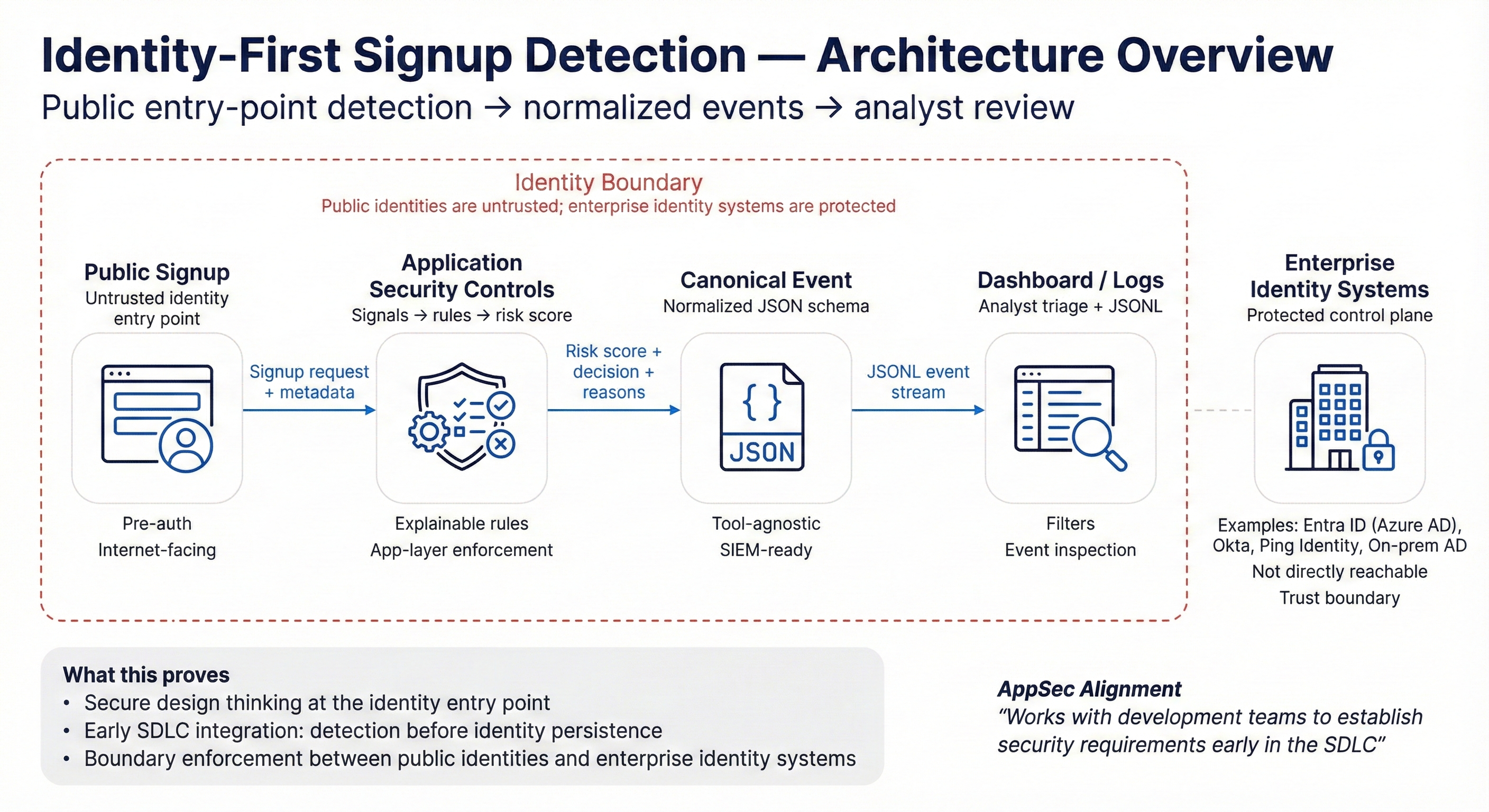

Architecture Overview

Design Principles

Every decision in this system follows four rules:

- Transparent: Analysts can see why a decision was made

- Deterministic: The same inputs always produce the same result

- Explainable: No "AI magic" or black-box scoring that blocks users without a visible reason

- Auditable: Every event is logged for review

Public Signup Entry Point (Live Environment)

A production-style, internet-facing signup form used as the controlled entry point for identity-first detection. All validation and detection occurs before any account or identity is persisted.

System Components Flow

Phase A - Environment: Public Identity Entry Point

GOAL

The first phase of this project establishes a realistic, internet-facing environment that mirrors how modern consumer applications expose identity creation to untrusted users.

Rather than simulating signup behavior in isolation, a production-style public signup page was deployed as the system’s primary entry point. This interface represents the earliest moment where identity-related trust decisions can be made—before authentication, authorization, or account persistence occurs.

WHAT I IMPLEMENTED

- A functional signup form with basic validation

- Backend system to receive and process signups

- Identity tracking (email patterns, device info, location)

- Complete event logging
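The identity tracking above can be sketched as a small function that turns one raw submission into the identity and request fields of the event schema. This is a minimal sketch. It assumes the form arrives as a dict with an `email` key and the headers as a dict. `extract_signals` and the `uses_plus_addressing` field are hypothetical names, not the project's backend code. Location is represented only by the source IP, and nothing is persisted.

```python
from typing import Any


def extract_signals(form: dict[str, str], headers: dict[str, str], source_ip: str) -> dict[str, Any]:
    """Collect pre-auth identity and request signals from one signup submission.

    Hypothetical helper: the "email" form key and header names are assumptions.
    Nothing is persisted; the returned dict is only evaluated and logged.
    """
    email = form.get("email", "").strip()
    local_part, sep, domain = email.rpartition("@")
    if not sep:
        # No "@" at all: keep the raw value as the local part, with no domain.
        local_part, domain = email, ""
    # HTTP header names are case-insensitive, so compare them lowercased.
    lowered_headers = {name.lower(): value for name, value in headers.items()}
    return {
        "identity": {
            "identity_type": "email",
            # The domain drives checks such as disposable-provider lookups.
            "email_domain": domain.lower(),
            # Plus-addressing ("name+tag@") is one simple email pattern to record.
            "uses_plus_addressing": "+" in local_part,
        },
        "request": {
            "source_ip": source_ip,  # the only location signal in this sketch
            "user_agent": lowered_headers.get("user-agent", ""),
        },
    }
```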

BASELINE RESULT

- ✅ Real users sign up without friction

- ✅ No false alarms blocking legitimate people

- ✅ Every signup attempt captured for analysis

By anchoring detection at the public entry point, Phase A establishes the foundation for identity-first security: treating identity creation itself as a control plane, not a passive UX feature.

Phase B — Threat Simulation & Abuse Modeling

GOAL

Validate that the system only responds to real threats — not legitimate behavior that "looks suspicious."

ATTACK SCENARIOS TESTED

I simulated common attacker techniques:

- Disposable Emails: Temporary addresses used to create throwaway accounts.

- Bot Automation: Fake "browsers" submitting forms at scale

- Weak Passwords: Common passwords attackers try first

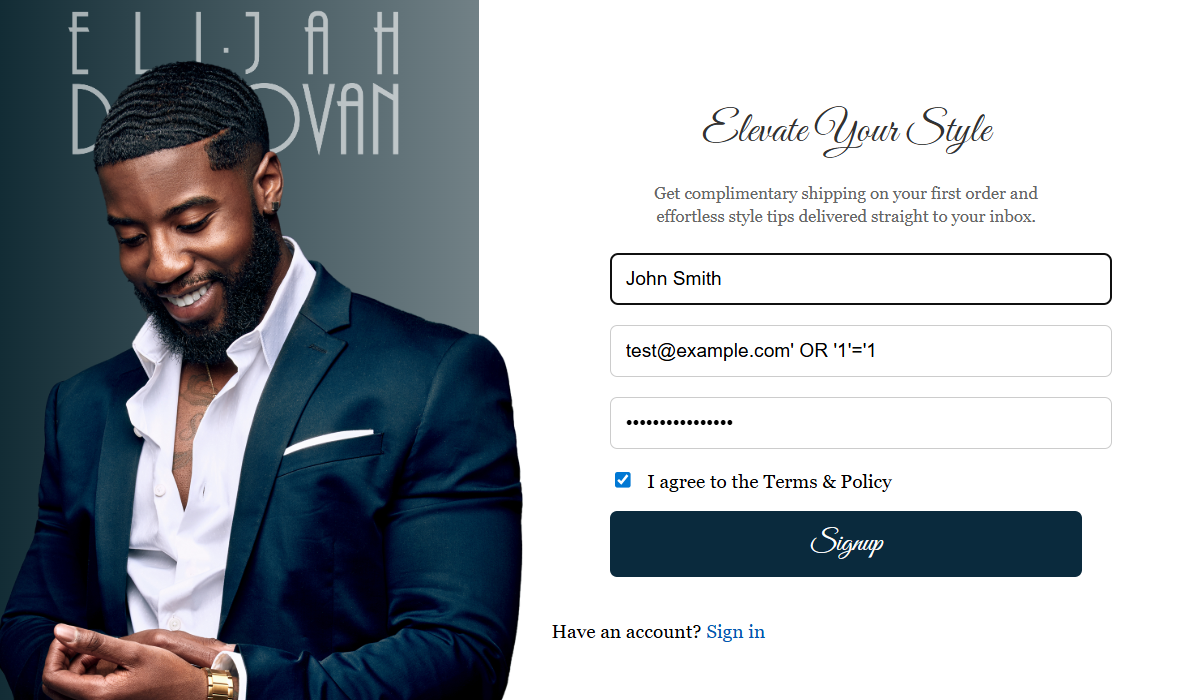

- SQL Injection Attempts: Malicious code hidden in the email field

DETECTION RULES IN ACTION

The system explicitly checks for:

- Known database attack patterns

- Disposable email services

- Bot-like behavior indicators

- Suspicious timing patterns
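Here is a minimal sketch of these checks, plus the weak-password indicator from the scenarios above. It assumes the backend already has four inputs: the email domain, the User-Agent string, the password, and the seconds elapsed between page render and submit. That last value, `fill_seconds`, is hypothetical; the form would have to supply it. The domain list, User-Agent markers, common-password list, and 3-second threshold are illustrative placeholders, not the project's actual rule data. Each check emits a named reason, so every decision can be traced to the rule that fired.

```python
# Illustrative placeholder data; a real deployment would maintain these lists externally.
DISPOSABLE_DOMAINS = {"mailinator.com", "guerrillamail.com", "10minutemail.com"}
AUTOMATION_UA_MARKERS = ("sqlmap", "curl", "python-requests", "headlesschrome")
COMMON_PASSWORDS = {"123456", "password", "qwerty", "111111", "letmein"}
MIN_PASSWORD_LENGTH = 8
# Assumed threshold: a human rarely completes the form in under 3 seconds.
MIN_FILL_SECONDS = 3.0


def detect(email_domain: str, user_agent: str, password: str, fill_seconds: float) -> list[str]:
    """Return the name of every rule that fired, in a fixed order."""
    reasons: list[str] = []
    if email_domain.lower() in DISPOSABLE_DOMAINS:
        reasons.append("disposable_email_domain")
    ua = user_agent.lower()
    # A missing User-Agent is treated as an automation indicator too.
    if not ua or any(marker in ua for marker in AUTOMATION_UA_MARKERS):
        reasons.append("automation_user_agent")
    if password.lower() in COMMON_PASSWORDS or len(password) < MIN_PASSWORD_LENGTH:
        reasons.append("weak_password")
    if fill_seconds < MIN_FILL_SECONDS:
        reasons.append("suspicious_timing")
    return reasons
```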

WHAT THE SYSTEM DETECTED:

- ✅ Bot automation signatures

- ✅ Throwaway email domains

- ✅ Weak password choices

- ❌ Embedded SQL attack (intentionally missed — see below)

ACTIONS TAKEN:

- Allow: Legitimate or low-risk signups (4 attempts)

- Challenge: Suspicious but not confirmed threats (3 attempts)

- Block: High-confidence attacks (3 attempts)

Why the "missed" SQL injection matters

This is actually a success.

The system didn't detect that specific SQL attack because it wasn't told to look for that variant. The detection rules I wrote covered common patterns — but this particular technique fell outside those rules.

Here's what makes this important:

- ✅ The attempt was logged — we have evidence

- ✅ The system behaved predictably — no mysterious failures

- ✅ The gap is documented — we know exactly what to improve

Real security insight:

Detection systems don't "know" what's bad. They only catch what they're explicitly programmed to catch. Understanding your gaps is just as valuable as understanding your coverage.

A SQL injection–style payload was intentionally crafted and submitted through the live signup flow. The payload embedded a tautology within a syntactically valid email field.

Phase C - Enforcement Validation & Analyst Visibility

Objective

Confirm that enforcement decisions are:

- Deterministic

- Explainable

- Auditable

Enforcement Model

Enforcement model mapping risk levels to actions: Low maps to Allow, Medium maps to Challenge, High maps to Block.

There are:

- No overlapping outcomes

- No ambiguous decisions

- No silent failures
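Because each level maps to exactly one action, the model fits in a lookup table. This is a sketch with hypothetical names (`RiskLevel`, `Action`, `enforce`). It refuses to load if any level is left unmapped. It also raises on an unknown level rather than falling back to a default, which mirrors the three properties above.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class Action(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"
    BLOCK = "block"


# Exactly one action per level: no overlapping outcomes, no ambiguous decisions.
ENFORCEMENT = {
    RiskLevel.LOW: Action.ALLOW,
    RiskLevel.MEDIUM: Action.CHALLENGE,
    RiskLevel.HIGH: Action.BLOCK,
}

# Fail at load time if a risk level is ever added without an action.
if set(ENFORCEMENT) != set(RiskLevel):
    raise RuntimeError("every risk level must map to exactly one action")


def enforce(level: RiskLevel) -> Action:
    # Plain indexing: an unmapped value raises instead of silently defaulting.
    return ENFORCEMENT[level]
```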

Detection Logic (As Implemented)

SQL injection detection in Phase B relies exclusively on explicitly defined pattern-based rules, including:

- Classic tautologies (e.g., OR 1=1)

- UNION-based injection patterns

- SQL comment syntax (--, /* */)

- Pattern matching on specific request fields

The system does not perform semantic parsing, heuristic inference, or normalization of identity fields prior to evaluation. This constraint is intentional and central to the project’s detection-engineering philosophy.
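To make this constraint concrete, here is a minimal sketch of literal pattern matching on a raw field, covering the rule categories listed above. The regular expressions and sample inputs are hypothetical illustrations, not the project's actual rule set or the payload used in testing. What matters is the behavior: any input that expresses the same intent without matching an encoded pattern passes through.

```python
import re

# Hypothetical rules for the categories listed above. No decoding, Unicode
# folding, or whitespace cleanup happens before matching.
SQLI_PATTERNS = [
    re.compile(r"\bOR\s+1\s*=\s*1\b", re.IGNORECASE),           # classic tautology
    re.compile(r"\bUNION\s+(ALL\s+)?SELECT\b", re.IGNORECASE),  # UNION-based
    re.compile(r"--|/\*.*?\*/"),                                # SQL comment syntax
]


def sqli_signal(field_value: str) -> bool:
    """True if any explicit rule matches the raw field value."""
    return any(pattern.search(field_value) for pattern in SQLI_PATTERNS)
```

With these rules, `sqli_signal("x' OR 1=1@example.com")` returns True. However, `sqli_signal("x' OR 'a'='a@example.com")` and the percent-encoded `sqli_signal("x%27%20OR%201%3D1@example.com")` both return False, even though they carry the same tautology intent.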

Observed Detection Outcomes

Signals Observed

- Suspicious User-Agent detected (sqlmap, curl, headless tooling)

- Disposable and anonymous email domain detection

- Weak or common password indicators

- No SQL injection signal triggered for the tautology embedded in the email field

- All signup attempts successfully ingested and logged to BigQuery

Enforcement Decisions

- Allow: Low-risk signups from legitimate domains and ambiguous inputs lacking explicit rule coverage

- Challenge: Medium-risk signups involving disposable identities or automation indicators

- Block: High-risk signups where multiple strong indicators converged

Risk Distribution

- High: 3 events — automatically blocked

- Medium: 3 events — challenged

- Low: 4 events — allowed
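A distribution like this is what a dashboard query produces. The project logged events to BigQuery; as a local stand-in, this sketch counts (risk level, action) pairs from a JSON Lines export. It assumes each line follows the canonical event schema, and the function name is hypothetical. Counting pairs rather than levels alone shows whether each level actually received its expected action, which is closer to security meaning than a raw event count.

```python
import json
from collections import Counter


def risk_distribution(jsonl_text: str) -> Counter:
    """Count (risk_level, action) pairs across JSON Lines signup events."""
    counts: Counter = Counter()
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # skip blank lines instead of failing the whole report
        event = json.loads(line)
        counts[(event["detection"]["risk_level"], event["decision"]["action"])] += 1
    return counts
```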

Key Observation

The system behaved exactly as engineered. The SQL injection–style payload was logged and analyzed, but not classified as SQLi because it did not match any explicitly defined detection rules. This outcome reflects a detection gap by design, not a system failure.

This reinforces a foundational detection-engineering principle: systems only detect what engineers intentionally encode.

Detection Gap Analysis

- SQL injection attempts embedded within syntactically valid identity fields

- Attacks that evade static pattern matching without normalization

- Lack of cross-field semantic correlation at signup time

This gap is valuable. It exposes the limits of static rules, highlights the responsibility of detection engineers rather than tooling, and provides a clear roadmap for future improvements.
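One way to close the second gap is to normalize a field before matching it. This is a minimal sketch of something the current system does not do. It takes four steps:

1. Percent-decode twice.
2. Apply Unicode NFKC folding.
3. Replace inline /* */ comments with spaces.
4. Collapse whitespace.

The single tautology rule and the `normalize` and `tautology_signal` helpers are hypothetical. The normalized string would be used only for evaluation, while the raw value is still what gets logged. Each step widens what matches, so it also widens the false-positive surface.

```python
import re
import unicodedata
from urllib.parse import unquote

# Hypothetical single rule, the same literal tautology pattern as before.
TAUTOLOGY = re.compile(r"\bOR\s+1\s*=\s*1\b", re.IGNORECASE)


def normalize(value: str) -> str:
    """Canonicalize a field for evaluation only; the raw value is still logged."""
    # Percent-decode twice so double-encoded input (%2527 -> %27 -> ') is unwrapped.
    decoded = unquote(unquote(value))
    # NFKC folds compatibility characters such as fullwidth letters into ASCII.
    decoded = unicodedata.normalize("NFKC", decoded)
    # Replace inline /* ... */ comments, often used as whitespace substitutes.
    decoded = re.sub(r"/\*.*?\*/", " ", decoded)
    # Collapse any run of whitespace to a single space.
    return re.sub(r"\s+", " ", decoded)


def tautology_signal(value: str) -> bool:
    return TAUTOLOGY.search(normalize(value)) is not None
```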

Open Questions for Phase C

- How should identity fields be normalized prior to detection evaluation?

- Which additional SQLi intent patterns should be explicitly enumerated?

- Should SQLi detection be correlated with automation and identity signals?

- Where does expanded pattern coverage risk false positives at signup time?

- How can “allowed but suspicious” events be leveraged for future correlation without retroactive enforcement?

Technology Stack

Frontend:

- HTML, CSS, JavaScript

- Static site hosted on Google Cloud Storage

- Custom domain (elidon.huntercloudsec.dev)

Backend/Processing:

- Python (FastAPI)

- Deployed as a serverless service

- Receives signup submissions and emits security events

- No account persistence by design

Artifacts & References

- Live Environment (Controlled): elidon.huntercloudsec.dev, a public, internet-facing signup surface used to observe pre-authentication identity signals. No accounts are persisted; all activity is logged for detection research and analysis.

- Detection Architecture: Identity inspection layer, normalization pipeline, and deterministic enforcement logic documented in system diagrams and flow models.

- Canonical Event Schema: Normalized JSON event model capturing signup attempts, identity attributes, detection signals, and enforcement outcomes for downstream SIEM compatibility.

Security Detection:

- Rule-based detection logic

- Pre-auth identity signal capture: Email attributes, User-agent strings, Submission metadata

- Weighted risk scoring

- Explainable decision output (allow / challenge / block)
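Weighted risk scoring can be sketched as a sum over the distinct reasons that fired, capped at 100 and bucketed into the three levels. The weights and the 30/70 thresholds below are assumptions for illustration, not the project's tuned values. With these numbers, one strong signal lands in medium. Two strong signals together reach high, for example automation plus a disposable domain (40 + 30 = 70). A reason with no weight raises KeyError instead of silently scoring zero.

```python
# Assumed weights and thresholds, for illustration only.
SIGNAL_WEIGHTS = {
    "sqli_pattern": 60,
    "automation_user_agent": 40,
    "disposable_email_domain": 30,
    "suspicious_timing": 20,
    "weak_password": 10,
}
HIGH_THRESHOLD = 70
MEDIUM_THRESHOLD = 30


def score(reasons: list[str]) -> tuple[int, str]:
    """Sum the weights of distinct fired reasons (capped at 100) and bucket them."""
    # Indexing raises KeyError for a reason with no weight, rather than scoring it 0.
    total = min(100, sum(SIGNAL_WEIGHTS[reason] for reason in set(reasons)))
    if total >= HIGH_THRESHOLD:
        return total, "high"
    if total >= MEDIUM_THRESHOLD:
        return total, "medium"
    return total, "low"
```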

Event & Logging:

- Canonical security event schema (JSON)

- Runtime output in JSON Lines (JSONL)


- Compatible with SIEM ingestion pipelines
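Here is a minimal sketch of the JSON Lines output, assuming each event is assembled from already-computed parts. `to_jsonl_line` is a hypothetical helper. It uses the same top-level fields as the sample event in this section, but writes each record compactly on a single line. JSON Lines requires one JSON value per line; the pretty-printed sample is for readability only.

```python
import json
from datetime import datetime, timezone
from typing import Any, Optional


def to_jsonl_line(
    identity: dict[str, Any],
    request: dict[str, Any],
    detection: dict[str, Any],
    decision: dict[str, Any],
    now: Optional[datetime] = None,
) -> str:
    """Serialize one signup_attempt event as a single JSON Lines record."""
    if now is None:
        now = datetime.now(timezone.utc)
    event = {
        "event_type": "signup_attempt",
        # UTC in ISO 8601 with a trailing "Z", matching the sample event's format.
        "event_time": now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "identity": identity,
        "request": request,
        "detection": detection,
        "decision": decision,
    }
    # Compact separators; json.dumps escapes newlines inside strings, so the
    # record always occupies exactly one line.
    return json.dumps(event, separators=(",", ":")) + "\n"
```

In use, each record would be appended to a log file opened in append mode, e.g. `log.write(to_jsonl_line(...))`. A SIEM pipeline can then ingest it line by line.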

```json
{
  "event_type": "signup_attempt",
  "event_time": "2026-01-07T15:00:00Z",
  "identity": {
    "identity_type": "email",
    "email_domain": "example.com"
  },
  "request": {
    "source_ip": "34.120.10.40",
    "user_agent": "curl/8.0"
  },
  "detection": {
    "risk_score": 90,
    "risk_level": "high",
    "reasons": [
      "Injection pattern detected in email field"
    ]
  },
  "decision": {
    "action": "block",
    "confidence": "high"
  }
}
```

Tradeoffs & Limitations

The system intentionally excludes real-time streaming, IP reputation feeds, and machine-learning models. These capabilities were deferred to preserve clarity and focus on core detection concepts. The architecture remains extensible without requiring changes to the event schema or detection interface.

Key Takeaways

Security decisions should happen before trust is granted, not after it is abused.

By applying detection early, normalizing events into a consistent format, and prioritizing explainability, this project demonstrates how identity-first detection can significantly reduce downstream risk while remaining practical for security teams to implement and maintain.

The approach is grounded in real-world AppSec practices: treating public signup as a security control point, keeping detection logic transparent, and ensuring the system integrates naturally with existing security tooling and workflows.

Lessons Learned

- Pre-authentication telemetry is often overlooked but provides the highest-leverage detection point

- Risk signals can be inferred without requiring full identity establishment

- Early enforcement dramatically reduces downstream remediation costs

- Dashboards must reflect security meaning, not just event counts

What Comes Next

SHORT TERM IMPROVEMENTS:

- Normalize identity fields before inspection

- Expand SQL injection pattern coverage

- Add cross-signal correlation
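Cross-signal correlation could start as a small table of signal combinations that raise the risk level. This sketch assumes a hypothetical weak `sqli_hint` signal, such as a quote character in an email field, that would not block on its own. Only when it co-occurs with an automation User-Agent does the combined evidence escalate to high. Rule contents and names are illustrative. Every matched rule adds its own explanation, so escalations stay as explainable as single-rule decisions. Correlation can only raise a level, never lower it.

```python
LEVEL_ORDER = {"low": 0, "medium": 1, "high": 2}

# Hypothetical rules: (signals that must all be present, minimum level, explanation).
CORRELATION_RULES = [
    (frozenset({"sqli_hint", "automation_user_agent"}), "high",
     "injection-style input from an automated client"),
    (frozenset({"disposable_email_domain", "suspicious_timing"}), "medium",
     "throwaway identity submitted at bot speed"),
]


def correlate(reasons: set[str], level: str) -> tuple[str, list[str]]:
    """Raise the risk level when signals co-occur; never lower it."""
    explanations: list[str] = []
    for required, minimum, why in CORRELATION_RULES:
        if required <= reasons:  # every required signal fired
            explanations.append(why)
            if LEVEL_ORDER[minimum] > LEVEL_ORDER[level]:
                level = minimum
    return level, explanations
```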

FUTURE ENHANCEMENTS:

- Controlled false-positive testing

- Integration with downstream fraud systems

- Adaptive rule tuning based on real attacks